Augmented Curiosities: Virtual Play in African Pasts and Futures is an immersive exhibition that places African material culture in the hands of its visitors. As an Innovator-in-Residence for Media Technology & Innovation, I developed the project through collaborations with the Northwestern University Library, most specifically the Melville J. Herskovits Library of African Studies, and Northwestern IT. Augmented Curiosities is designed to promote the Herskovits’ incredible object collection and provoke conversations about the use of Virtual Reality (VR) and Augmented Reality (AR) within the storytelling strategies of museums.

I’m an archaeologist and a curator. Within these disciplines, I use material-based methods to explore my interests in expressive and tangible culture as a force of preservation – especially within cultures that do not rely upon the written record. Archaeologists understand that objects and artifacts often embody characteristics of the people and processes that created them. Craftspeople, artists and producers of many practices embed their unique perspectives within the fabric of their works. The energy of an individual, community or landscape can often be gleaned from the materials it produces. Because of this, we curators often use objects to tell stories of the human past and widen an audience’s capacity for imagination. I’m particularly interested in widening imaginations about African pasts and futures. Curation provides me with the intellectual space to produce scholarship as an artist within an archaeological practice. I work to engage that artistry through experimentation with technology and digital media. Augmented Curiosities is my most recent effort towards that end.

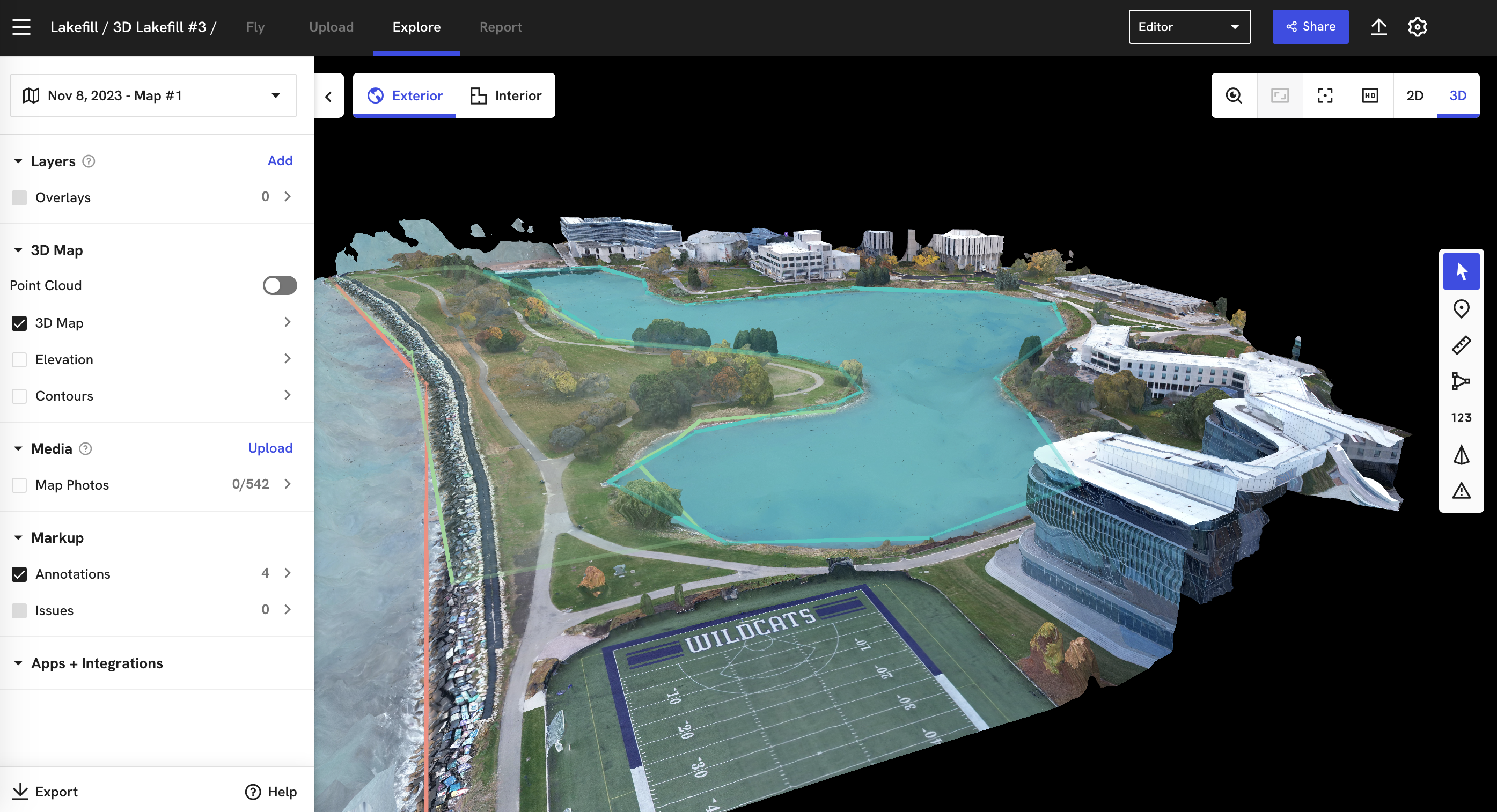

For this project, I sought ways to place fragile, valuable or otherwise inaccessible objects from the Herskovits Library of African Studies collection into the hands of visitors. To accomplish this, our team integrated Virtual Reality and Augmented Reality experiences within an exhibition that allowed visitors to engage intimately with the objects. We used photogrammetry and 3D modeling to create a digital replica of each object and of the exhibition cabinet in which they are housed. We then made those replicas accessible through a VR application developed in the Unity game engine and a web AR experience built on the 8th Wall platform. This curatorial framework allowed me to express historical, archaeological and anthropological data as time, space and experience. The VR and AR content delivery strategies offer visitors an immersive experience in which they may virtually remove the objects from the glass-paned exhibition cabinet and play with them. Through this we created a product that was both educational and entertaining – simultaneously exhibiting emerging technologies and relatively hidden objects.

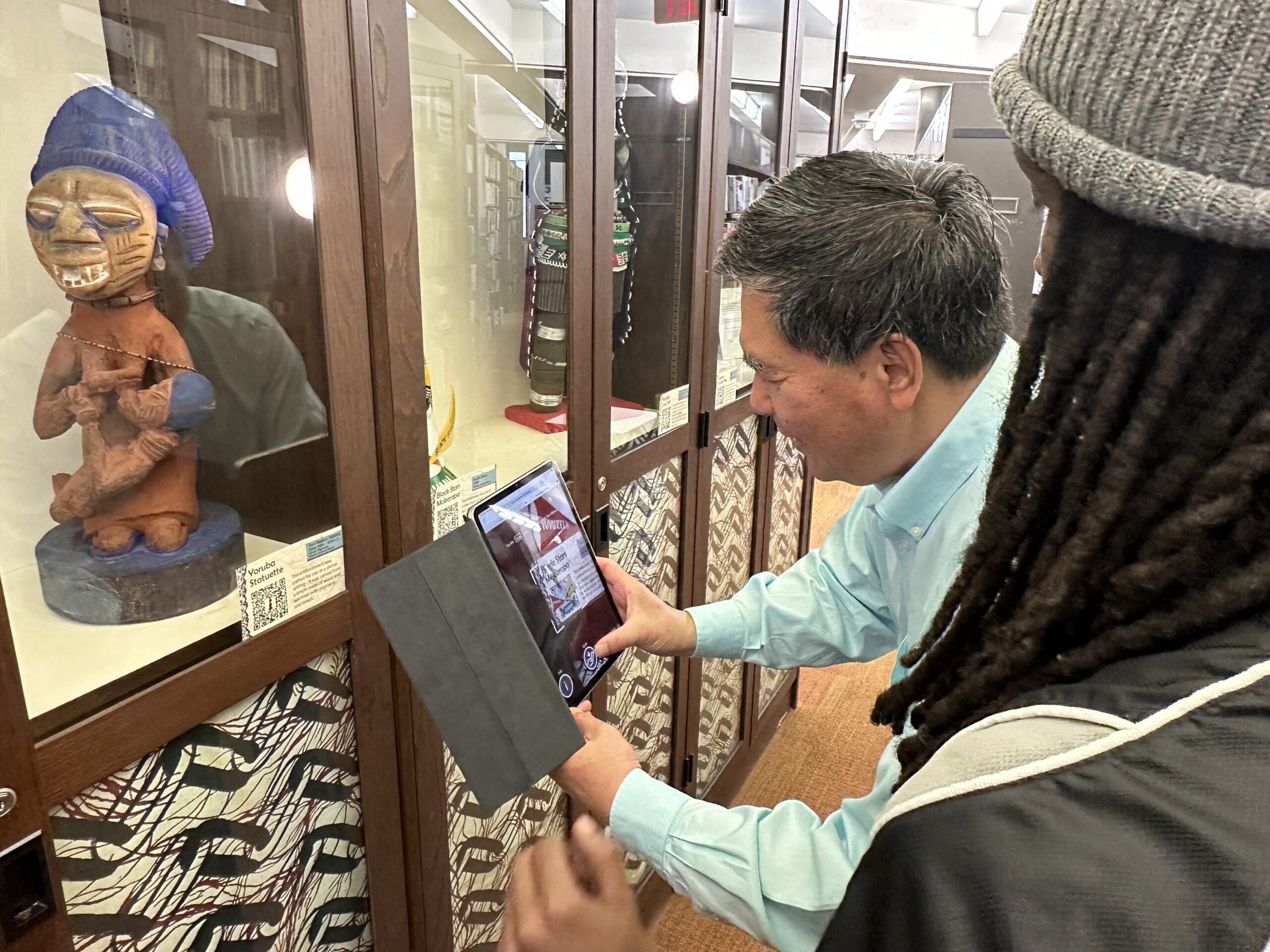

Northwestern University Dean of Libraries, Xuemao Wang, trying out the Augmented Reality Experience


Augmented Curiosities was partly inspired by my interest in affective curation. I believe objects possess energy that humans transform into meaning. We deploy our personalities, emotions and unique lived experiences to make sense of our physical surroundings. I want to curate this phenomenon. Rather than highlighting geographic origins or manufacture dates, I am far more interested in allowing a visitor to have experiences with material culture. I sought to exhibit the feeling of the objects as opposed to prescribing their meanings. To capture this within the exhibition, we produced video interviews with Northwestern University community members that contextualize each object within emotional, political, spiritual and familial landscapes. Interviewees were prompted to respond to the question: “What does this object mean to you and how does it make you feel?” The videos of their testimonies create an atmosphere of intimacy as visitors explore the VR experience. Affective curation allows me to invert some of the power dynamics of exhibition development, delegating parts of the knowledge-production process to the communities that the exhibition engages. I am eager to further develop this theory and methodology throughout my career.

This experience affirmed for me the expansive opportunities offered by digital curation. The Augmented Curiosities project facilitated the production of a new archive – consisting of 3D models, video, audio and literature. The project created and consolidated a dynamic range of new and existing resources that support cursory browsing or rabbit-hole deep dives into the objects, communities and research that constitute the exhibition. Following the guidance of Esmeralda Kale, the curator of the Herskovits Library of African Studies, I created an Augmented Curiosities LibGuide which preserves the exhibition within the Northwestern University Library database. It is my hope that this rootedness within the digital infrastructure of the Library will encourage further inclusion of digital resources, such as 3D models and expert testimonies, within the University’s digital repositories.

Augmented Curiosities is the product of ambitious ideation and incredible institutional support. A wide range of Northwestern University units contributed time, expertise and funding to produce this unprecedented exhibition. I am grateful and indebted to the talented group of developers, engineers, scholars and technicians who believed in my idea and assisted in its creation. Augmented Curiosities is a testament to the worthiness of interdisciplinary research and the ease with which meaningful cross-campus collaboration can occur at Northwestern University.

Personally, this project has been a tremendous success. I tested hypotheses, gained methodological training, developed theory and shared a product of the process with the Northwestern University community – an exemplary graduate student research experience that I’m extremely grateful for. Ultimately, it is my hope that Augmented Curiosities makes evident the power of immersive visualization techniques and encourages visitors to critically reflect upon the value proposition of exhibitions and the museum industry.